“This is not your father’s ETL. This is not your mother’s message bus. This is not your uncle’s application integration”

– Rich Dill commenting on the need to think differently about application and data integration with SnapLogic

This week I sat down with Rich Dill, one of SnapLogic’s data integration gurus who has over twenty years of experience in the data management and data warehousing world. Rich talked about the leap forward in terms of ease-of-use and user enablement that an integration platform as a service (iPaaS) can provide. He also commented on the latency differences and when it comes to moving data from one cloud to another or from a cloud to a data center, compared to moving data from one application to a data warehouse in a data center.

This week I sat down with Rich Dill, one of SnapLogic’s data integration gurus who has over twenty years of experience in the data management and data warehousing world. Rich talked about the leap forward in terms of ease-of-use and user enablement that an integration platform as a service (iPaaS) can provide. He also commented on the latency differences and when it comes to moving data from one cloud to another or from a cloud to a data center, compared to moving data from one application to a data warehouse in a data center.

“When using a new technology people tend to use old approaches. And without training what happens is they are not able to take advantage of the features and capabilities of the new technology. It’s the old adage of putting the square peg in a round hole.”

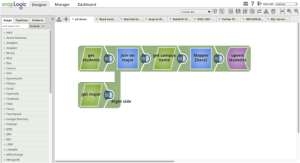

To highlight the power of how SnapLogic brings together multiple styles of integration in a single platform, Rich put together this demonstration, where he creates a data flow, called a pipeline, that is focused on a classic extract, transform and load (ETL) use case and goes much further. Here’s a summary of SnapLogic consuming, transforming and delivering data.

Part 1: ETL as a Service

- Rich selects data from two databases, explaining how you can preview data and view it in multiple formats as it flows through the platform.

- He reviews how SnapLogic processes JSON documents, which gives the platform the ability to loosely couple the structure of the integration job to the target, and goes on to perform inner and outer Joins before formatting the output and writing the joined data to a File Writer.

- Then he goes back and adds a SQL Server Lookup to get additional information.

- He runs the pipeline and creates a version of it.

Part 2: Managing Change (Ever get asked to add a few more columns in a database and then have to change your data integration task?)

- He goes in and modifies the underlying SQL table.

- He re-runs the SnapLogic pipeline and shows the new results, without having to make a change. This highlights the flexibility and adaptability of the SnapLogic Elastic Integration Platform.

Part 3: Salesforce Data Loading

- He brings in the Data Mapper Snap to map data to what’s in Salesforce.

- He drags and drops the Salesforce Upsert Snap and determines if he should use the REST or Bulk API.

- He uses SmartLink to do a fuzzy search and map the input and output fields.

- He reviews the Expression Editor to highlight the kinds of data transformations that are possible.

- He shows how data is now inserted into Salesforce and saves this version of the pipeline.

Part 4: RESTful Pipelines

- He removes the Data Mapper Snap because the output will be different and he brings in the JSON Formatter.

- Here, Rich takes a minute to review how not only is SnapLogic a loosely-coupled document model, but it’s also 100% REST-based. This means that each pipeline is abstracted and, as he puts it, “addressable, usable, consumable, trigger-able, schedule-able as a REST call.”

- He goes to Manager > Tasks and creates a new Task and sets it to Trigger.

- He executes the Task to demonstrate that, when calling the REST-based pipeline, it can then show how a mobile device can do a REST Get to bring the data into a mobile device.

- He wraps up the demonstration by changing the pipeline from a JSON document output to an XML document.

I’ve embedded the video below. Be sure to check out our Resource center for other information about SnapLogic’s Elastic Integration Platform. Thanks for a great demo Rich!