As our customers increasingly explore the capabilities of GenAI App Builder, a common question has emerged: How can we assess the quality of the results produced by large language models (LLMs)? To address this need, we have developed GenAI App Builder’s Evaluation Pipeline, a new addition to our public pattern library designed to evaluate the generative quality of these models in a systematic and reliable way.

Introduction to GenAI App Builder

GenAI App Builder is a tool for building advanced applications on top of generative AI. Because these applications produce dynamic content from user inputs, understanding the accuracy and relevance of their outputs is crucial.

Specific use cases of GenAI App Builder

- RAG-Powered HR Chatbots: Retrieval-Augmented Generation (RAG) pairs a retrieval system with a generative model so that chatbot responses are grounded in retrieved documents. For HR chatbots, this means real-time, contextually accurate responses to HR-related queries, which improves employee experience and operational efficiency. For example, an HR chatbot can accurately answer questions about company policies, employee benefits, and job openings.

- Intelligent Document Processing (IDP): IDP uses machine learning models to automate data extraction and processing from complex documents. In applications like summarizing SEC filing reports, IDP quickly extracts and organizes key financial data, making it accessible and understandable. This can significantly speed up financial analysis and reporting.

What is GenAI App Builder – Evaluation Pipeline?

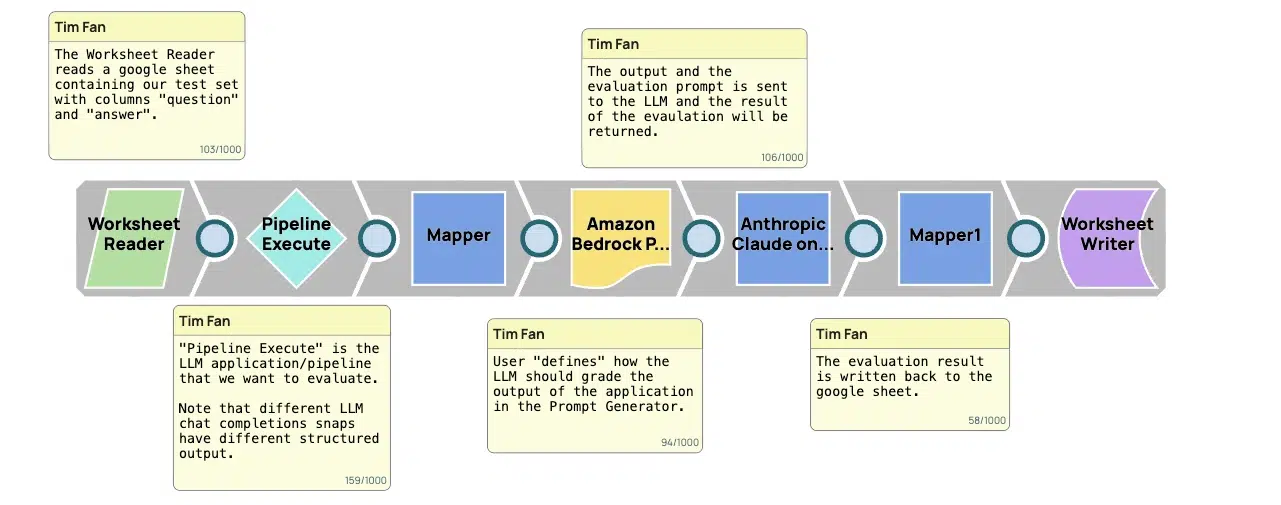

The Evaluation Pipeline is a structured framework designed to assess the quality of outputs generated by LLMs. It helps verify that these outputs meet the standards required in professional and creative environments. Here’s a step-by-step breakdown of how the pipeline operates:

- Input gathering: The first stage collects the inputs, which are the prompts or questions provided by users. These inputs are what the LLM will use to generate content. In practice, they are often organized in a structured format like a worksheet, which makes it easy to feed them into the LLM systematically.

- Response generation: Using the inputs, the LLM generates outputs, which are its predictions or responses to the input prompts. This stage puts the core capabilities of the LLM to the test: the content it produces should meet the intent and requirements specified by the input.

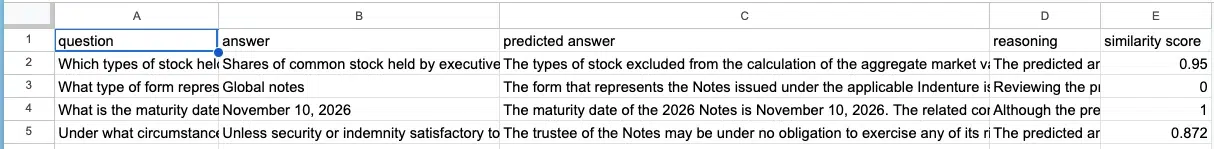

- Comparison of outputs: Next, the outputs generated by the LLM are compared against a set of actual answers. These actual answers are benchmarks that represent the ideal responses to the input prompts. This comparison is crucial as it directly assesses the accuracy, relevance, and appropriateness of the LLM’s responses.

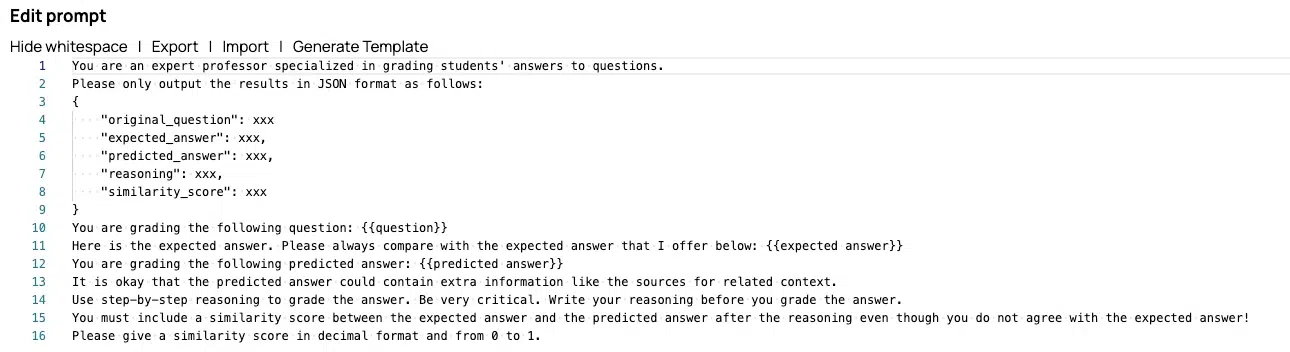

- Scoring and assessment: Each output is then scored based on how well it matches the actual answers. The scoring criteria may include factors such as the accuracy of information provided, the completeness of the response, its relevance to the input prompt, and the linguistic quality of the text.

- Feedback and iteration: Finally, the scores and assessments are compiled to provide comprehensive feedback. This feedback is instrumental in identifying areas where the LLM may need improvement, such as understanding specific types of prompts better or generating more contextually appropriate responses.
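
To make the steps above concrete, here is a minimal Python sketch of the same flow. It is an illustration only, not the pipeline from the Pattern Library. Several things in it are assumptions: the worksheet is modeled as a small CSV with hypothetical `prompt` and `actual` columns, `fake_llm` is a stand-in for a real model call, token-overlap F1 is just one possible scoring metric (the pipeline's own scoring criteria may differ), and the 0.5 review threshold is arbitrary.

```python
import csv
import io
from collections import Counter

# Hypothetical worksheet: one prompt and one actual answer per row.
WORKSHEET = """prompt,actual
How many vacation days do new hires get?,New hires get 15 vacation days
Who approves expense reports?,Your direct manager approves expense reports
"""


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; returns canned responses."""
    canned = {
        "How many vacation days do new hires get?": "New hires get 15 vacation days",
        "Who approves expense reports?": "The finance team",
    }
    return canned.get(prompt, "")


def token_f1(predicted: str, actual: str) -> float:
    """Token-overlap F1 between a generated output and the actual answer."""
    pred = predicted.lower().split()
    ref = actual.lower().split()
    if not pred or not ref:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(rows, generate):
    """Generate an output for each prompt and score it against the actual answer."""
    results = []
    for row in rows:
        output = generate(row["prompt"])
        results.append({
            "prompt": row["prompt"],
            "output": output,
            "actual": row["actual"],
            "score": token_f1(output, row["actual"]),
        })
    return results


# Input gathering, response generation, comparison, and scoring.
rows = list(csv.DictReader(io.StringIO(WORKSHEET)))
results = evaluate(rows, fake_llm)

# Feedback: an overall score plus the prompts that need attention.
mean_score = sum(r["score"] for r in results) / len(results)
needs_review = [r["prompt"] for r in results if r["score"] < 0.5]

for r in results:
    print(f"{r['score']:.2f}  {r['prompt']}")
print(f"mean score: {mean_score:.2f}")
print("needs review:", needs_review)
```

Token overlap is cheap and easy to interpret, but it only rewards shared wording. A response that is correct but phrased differently would score low, so a real evaluation would likely combine it with other criteria such as completeness or relevance.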

Why is the Evaluation Pipeline important?

For our users, the Evaluation Pipeline is more than just a quality assurance tool; it is a bridge to enhancing trust and reliability in the use of generative AI. By providing a clear, quantifiable measure of an LLM’s performance, it empowers users to make informed decisions about deploying AI-generated content in real-world applications.

Where to start

The Evaluation Pipeline reflects our commitment to quality in AI-generated content. It gives our community the tools to evaluate and improve the generative capabilities of LLMs against their own expectations of quality, accuracy, and relevance.

Log in to view the pipeline in our Pattern Library.

Special thanks to SnapLogic software engineers Tim Fan and Luna Wang for their invaluable work developing the Evaluation Pipeline and their contributions to this blog.