There has been a lot of discussion about data being the new currency. As Forbes Technology Council member Rob Versaw wrote in his blog post, “How To Implement The New Currency: Data,” “Companies with the most potential to grow and snag market share use data more efficiently than their competitors.” The potential to gain business value from data is there, but getting the data to the right location at the right time and in the right format is key to realizing it. Simply taking all available data and storing it in a data lake has been shown to have mixed success [1]. Movement of applications and data to the cloud is already occurring and continues to evolve. Big data storage and processing are sure to follow a similar trend. How can enterprises set themselves up for successful data hydration?

Enterprises today have embraced the cloud and use a broad set of SaaS applications across many lines of business (LoBs). Applications such as Salesforce, Workday, and Zendesk hold key information for uncovering business insight. Streaming data from IoT sensors provides valuable information about the health of equipment, while social media streaming data provides real-time information about how customers perceive your offerings. But to get the most business value out of data, you need to ingest and combine data from many sources, whether the SaaS vendors and streaming sources mentioned above or more traditional sources such as on-premises business data (DBMS). By augmenting primary data sources with additional internal and external data sources, companies can unlock the potential to discover new insights.
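To make the idea of augmenting a primary data source concrete, here is a minimal Python sketch. It assumes three small, made-up record sets (CRM accounts, support tickets, and sensor readings) that share a hypothetical `account_id` key; the field names and the `combine_sources` helper are illustrative assumptions, not part of any vendor API.

```python
from collections import defaultdict

# Hypothetical sample records; all field names are illustrative assumptions.
crm_accounts = [
    {"account_id": "A1", "name": "Acme Corp", "plan": "enterprise"},
    {"account_id": "A2", "name": "Globex", "plan": "starter"},
]
support_tickets = [
    {"account_id": "A1", "severity": "high"},
    {"account_id": "A1", "severity": "low"},
    {"account_id": "A2", "severity": "low"},
]
sensor_readings = [
    {"account_id": "A1", "temperature_c": 71.5},
    {"account_id": "A1", "temperature_c": 68.5},
]


def combine_sources(accounts, tickets, readings):
    """Augment each primary (CRM) record with a per-account ticket count
    and the mean sensor temperature, when readings exist."""
    ticket_counts = defaultdict(int)
    for ticket in tickets:
        ticket_counts[ticket["account_id"]] += 1

    temperatures = defaultdict(list)
    for reading in readings:
        temperatures[reading["account_id"]].append(reading["temperature_c"])

    combined = []
    for account in accounts:
        key = account["account_id"]
        values = temperatures.get(key, [])
        combined.append({
            **account,
            "ticket_count": ticket_counts.get(key, 0),
            # None marks accounts with no sensor data rather than guessing a value.
            "avg_temperature_c": sum(values) / len(values) if values else None,
        })
    return combined


combined = combine_sources(crm_accounts, support_tickets, sensor_readings)
for row in combined:
    print(row)
```

In practice the three inputs would arrive from different systems and in different formats, which is why ingestion and format alignment take most of the effort; the join itself is the easy part.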

Tapping the cloud

Along with the SaaS movement, data storage in the cloud is now the norm, whether you’re using a DBMS in the cloud such as Snowflake, Amazon Redshift, or Microsoft Azure SQL Data Warehouse, or object storage services such as Amazon S3 or Microsoft WASB. Cloud storage removes the need for IT to manage backups and data replication, since these tasks are part of the cloud service offering, which reduces the cost and complexity of managing IT resources. Cloud storage also provides a cost-effective way to store and manage enterprise data, allowing enterprise IT to become more agile.

Processing complex data sets

While Hadoop and HDFS have given enterprises the technology to store and process large, complex data sets, this environment requires a specialized skill set. In addition, Hadoop clusters have historically been on-premises systems that require a large upfront capital investment. Data lakes and big data processing are now following applications and data to the cloud. The first phase is referred to as “lift and shift,” where enterprises simply move the on-premises Hadoop cluster to a cloud provider, running it in a virtual network and taking advantage of IaaS benefits such as lower cost and ease of scaling. However, in this phase the clusters are still managed by the enterprise, so the skill-set gap remains.

The next phase of big data processing means moving the processing to a managed Hadoop as a service (HaaS) environment such as Amazon EMR, Microsoft HDInsight, Cloudera Altus, or Hortonworks Data Cloud. These managed services free enterprises from the complexity of managing and maintaining Hadoop environments. They can also make use of underlying cloud storage systems such as Amazon S3 and Microsoft ADLS, making it easier to ingest and deliver data without having to move or copy it to HDFS.

Making data lake hydration and processing easier

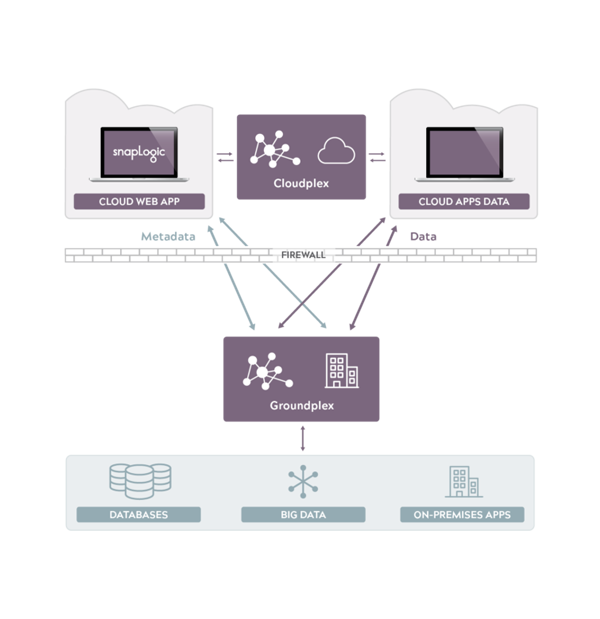

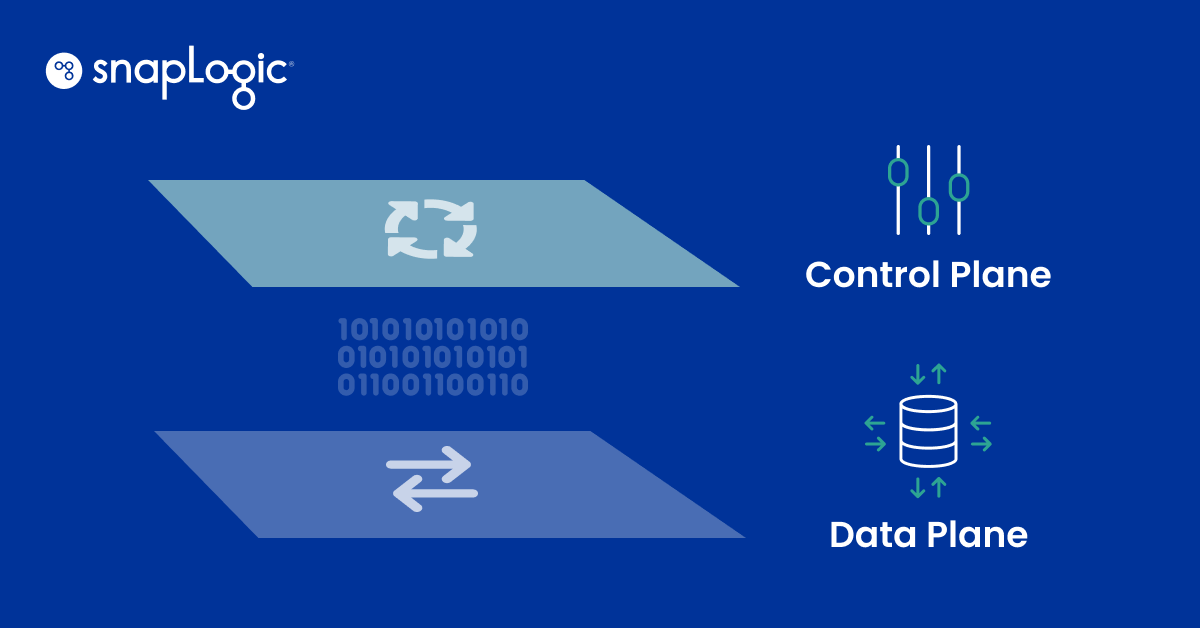

SnapLogic’s hybrid integration platform provides the means to enact data hydration from a comprehensive set of endpoints, whether in the cloud or on-premises. Our unique Snaplex architecture is designed to exchange data across a complex and evolving hybrid enterprise landscape.

To help close the skills gap that comes with hydrating and working with big data, SnapLogic makes connecting to endpoints as easy as dragging and dropping. We connect to the endpoints through the use of connectors called Snaps. We have more than 400 Snaps that connect with ERP, CRM, HCM, Hadoop, Spark, analytics, identity management, social media, online storage, relational, columnar, and key-value databases, and technologies such as XML, JSON, OAuth, SOAP, and REST, to name a few. By having a comprehensive set of endpoints, SnapLogic can increase the value of big data processing by including a greater variety of data.

SnapLogic extends integration from your traditional IT integrators to the LoB ad hoc integrators through its visual programming interface. The SnapLogic visual designer makes it simple for LoB users, integration specialists, and IT to snap together integrations in hours, not days or weeks – with no coding required.

With data transforming into the new currency, enterprises that become truly data driven will gain a competitive advantage. We have seen the movement of data and applications to the cloud, and now we are seeing big data processing moving to the cloud. Your big data processing solutions will continue to evolve just as your SaaS and data have before them. Are you ready? SnapLogic can support it all and is here for your migration to big data processing in the cloud.

1. Source: Gartner, “Derive Value From Data Lakes Using Analytics Design Patterns,” Sept. 2017