In the world of distributed web services and Big Data, errors and faults cannot be treated as infrequent occurrences. Rather, they are commonplace and must be understood and managed. Errors can take the form of bad or missing data, and faults can arise from web service congestion or failures. The role of an iPaaS (Integration Platform as a Service) is to help users perform integration tasks in the presence of errors and faults.

First, let’s be clear on the difference between errors and faults:

- An integration error usually refers to some form of data inconsistency due to data corruption, missing data, or unexpected data formats. The communication channels and integration pipeline execution all work as expected, but the data itself has a problem.

- A fault, on the other hand, is the result of a connection or service failure. In some cases, the iPaaS servers themselves may experience failures that result in faults, which must also be managed.

The SnapLogic Integration Cloud architecture provides both data error handling and fault tolerance to ensure the reliable execution of integration data flows, called pipelines. Pipelines can be designed to handle bad data using error views, and pipeline segments can be boxed to provide guaranteed delivery in the presence of network or service endpoint failure. In the data plane, our Snaplex clusters are designed to detect node failure and to ensure there is always a stable set of Snaplex nodes available to run pipelines. Finally, we also provide redundancy and reliability throughout the control plane. In this post, we will share details on error handling. Please be on the lookout for an additional blog post that explains how SnapLogic handles fault tolerance and resiliency in the architecture.

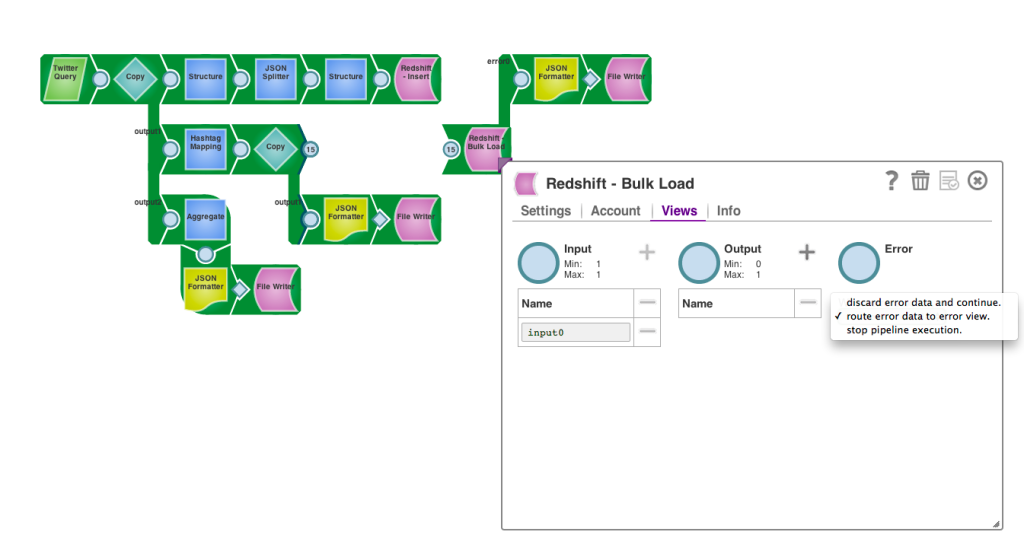

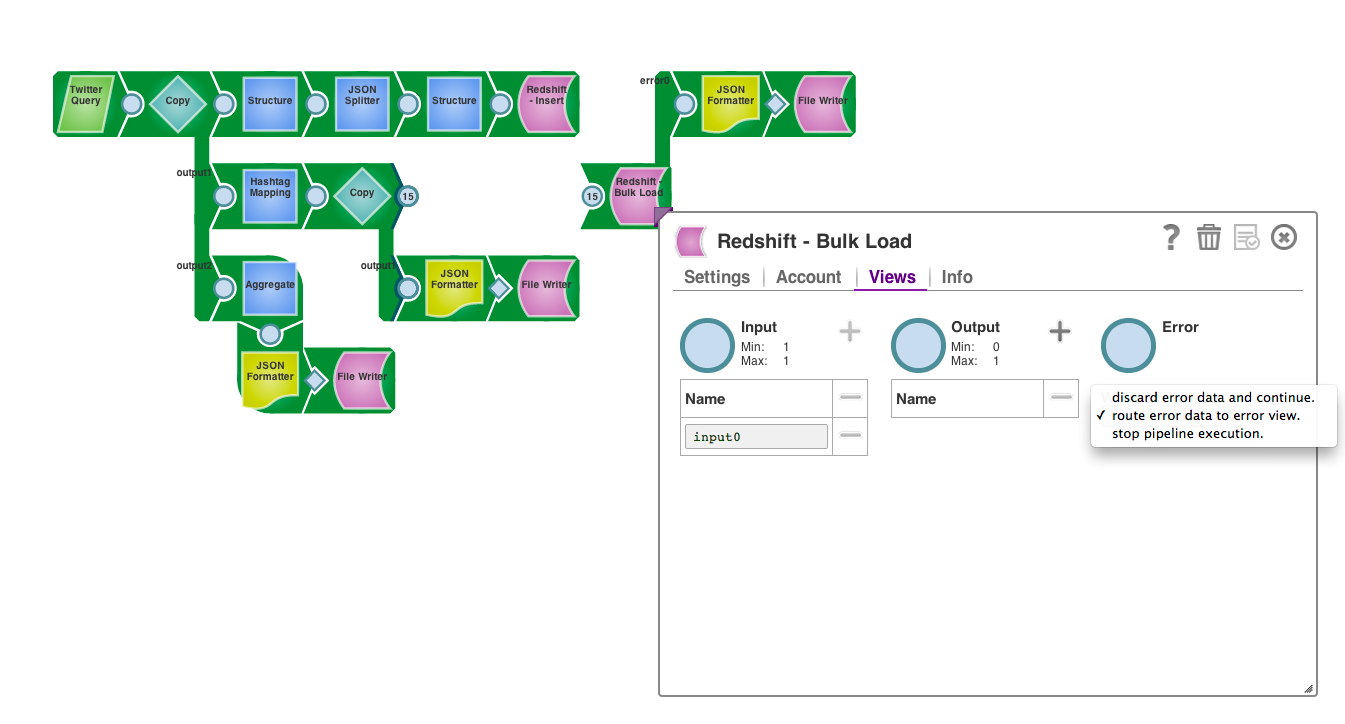

Error Views

SnapLogic Integration Cloud pipelines consist of Snaps that are connected together using views. Snaps can have zero or more input views and zero or more output views. For example, a database reader will have zero input views and one output view. A router Snap will have one input view and multiple output views. In addition to the output views, most Snaps can be configured to have an error view. Snap errors will usually cause a Snap and the pipeline to fail early. However, in some cases, a pipeline developer may want to manage error conditions explicitly. A common error is missing or bad data. If there is no error view, missing or bad data will cause the pipeline to fail. With an error view, however, the error condition is treated like data and can be passed on to a pipeline segment. In this way, a pipeline segment can be used to log the error or to fix up the bad data. This powerful feature allows developers to seamlessly handle both good and bad data using Snaps and pipeline segments. In some cases, certain types of faults can also be communicated as errors, which lets the pipeline developer build reliable pipeline execution. For example, a database connection fault can be realized as a document sent to an error view.

As an example, some records in a CSV file may be missing one or more fields. Our CSV Reader Snap can detect these error records and send them to the error view. The error records can be sent to a pipeline segment that can attempt to clean up the data or log it for later inspection. As another example, the Email Sender Snap has one available error view. If the Email Sender error view is enabled, it will be given a document for each bad address or unsent message. In this way, the pipeline developer can create a report for the bad addresses or use the bad addresses to update a contact database so that no future attempts will be made to send to the address.
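The CSV case can be sketched in a few lines of Python. This is only an illustration of the error-view idea, not SnapLogic code: the function name `read_with_error_view`, the shape of the error documents, and the sample data are all assumptions made for the example.

```python
import csv
import io


def read_with_error_view(text, required_fields):
    """Split CSV records into an output view and an error view.

    Hypothetical helper: records with a missing or empty required field
    become error documents instead of failing the whole run.
    """
    output_view, error_view = [], []
    reader = csv.DictReader(io.StringIO(text))
    for row in reader:
        # DictReader fills absent trailing fields with None, so treat
        # None and blank strings the same way.
        missing = [f for f in required_fields if not (row.get(f) or "").strip()]
        if missing:
            error_view.append({
                "line": reader.line_num,  # source line of the bad record
                "missing": missing,
                "original": row,
            })
        else:
            output_view.append(row)
    return output_view, error_view


SAMPLE = "name,email\nAda,ada@example.com\nBob,\nCy,cy@example.com\n"
good, bad = read_with_error_view(SAMPLE, ["name", "email"])
```

Here `good` holds the two complete records and `bad` holds one error document describing the record with the empty `email` field. A downstream step can then log `bad` or try to repair it, which is the role the post assigns to the pipeline segment attached to the error view.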

Guaranteed Delivery

The SnapLogic Integration Cloud enables the integration of several cloud services. Most modern cloud services are exposed via REST or SOAP interfaces over HTTP. However, the public network can be susceptible to failures that cause service requests to be dropped. To help manage service connection failures, the SnapLogic Integration Cloud supports guaranteed delivery of documents. To enable guaranteed delivery, the pipeline developer marks a streaming pipeline segment. This boxed segment can be configured with a retry policy. Documents that are to be sent to the boxed segment are temporarily held in persistent storage. Once a document has made it through the entire segment, an acknowledgement is sent to the pipeline manager, which allows the document to be removed from persistent storage. If the segment or segment endpoint fails, the retry policy is invoked to retrieve the document from persistent storage and send it through the segment again. Retry policies such as linear wait times or exponential backoff can be employed to manage most intermittent endpoint failure scenarios.
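The hold, acknowledge, and retry cycle described above can be sketched as follows. This is a simplified model under stated assumptions, not the platform's implementation: a plain dictionary stands in for persistent storage, and `backoff_delays`, `deliver`, and `FlakyEndpoint` are hypothetical names invented for the example.

```python
import time


def backoff_delays(policy, base, retries):
    """Return the wait before each retry for a linear or exponential policy."""
    if policy == "linear":
        return [base * (i + 1) for i in range(retries)]
    if policy == "exponential":
        return [base * 2 ** i for i in range(retries)]
    raise ValueError(f"unknown retry policy: {policy}")


def deliver(doc_id, doc, segment, store, delays, sleep=time.sleep):
    """Send a document through a segment, retrying on connection failure.

    Returns the number of attempts used, or None if retries ran out
    (in which case the document is still held in the store).
    """
    store[doc_id] = doc  # hold the document before the first attempt
    attempts = 0
    for delay in [None, *delays]:
        if delay is not None:
            sleep(delay)  # wait according to the retry policy
        attempts += 1
        try:
            segment(store[doc_id])  # re-read from the store on every attempt
        except ConnectionError:
            continue
        del store[doc_id]  # acknowledged: safe to remove from the store
        return attempts
    return None


class FlakyEndpoint:
    """Stand-in segment endpoint that fails a fixed number of times."""

    def __init__(self, failures):
        self.failures = failures
        self.received = []

    def __call__(self, doc):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("endpoint unavailable")
        self.received.append(doc)


store, waits = {}, []
endpoint = FlakyEndpoint(failures=2)
attempts = deliver("doc-1", {"id": 1}, endpoint, store,
                   backoff_delays("exponential", 0.5, 4), sleep=waits.append)
```

In this run the endpoint rejects the first two attempts and accepts the third, so the document is removed from the store only after it has actually been received. Passing `waits.append` as `sleep` records the backoff waits instead of pausing, which keeps the example fast; a real caller would leave the default `time.sleep`.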

For more details on the architecture of the SnapLogic Integration Cloud, check out our series of videos explaining our product and platform.