Most companies use only 5 to 10 percent of the data they collect, estimates Beatriz Sanz Saiz, a 20-year veteran of advanced analytics and the head of Ernst & Young’s global data and analytics practice. While it’s impossible to validate such a claim, the fact is that many organizations gather lots of data but analyze little of it.

Legacy database management systems shoulder much of the blame for this. They hog time and resources for the sake of storing, managing, and preparing data and thus impede analytics.

The rise of big data will only make matters worse. Just consider the variety of data generated each day in relational databases, SaaS applications, mobile apps, online ads, and social media. Oh, and then there’s the Internet of Things (IoT). Gartner expects 20.4 billion IoT devices to occupy our world by 2020 – 20 billion items that will breed cosmic amounts of data. And it’s not just the vast quantities of data that threaten to upend legacy systems; data is also growing more heterogeneous. One survey reveals that, on average, enterprises use a whopping 1,181 cloud services, many of which produce unique data. Technology debt, as well as the growing size and complexity of data, will likely push legacy systems to a breaking point.

To compete in the age of big data, organizations must look to the cloud. Modern, cloud-based data architectures are the only viable option for overcoming the three major hurdles legacy systems present to analytics.

1. The perils of provisioning

Before the advent of cloud data services, organizations had no choice but to build, provision, and maintain their own data infrastructure – a costly, code-heavy affair. This meant that any time you needed to store or query more data, you had to ensure you had the memory and computing power to back it up. As a result, IT departments would spend considerable time and energy measuring RAM, purchasing hardware and extra storage for high-usage times, installing servers, and engaging in other activities that, in themselves, did not yield data insights.

Provisioning servers was merely a cumbersome prerequisite to analytics. Nowadays, it’s a barrier.

Today, cloud computing providers like Amazon, Microsoft, and Google can manage your data infrastructure for you. Drawing from huge, ultra-fast data centers, they dole out the amount of storage and computing power you need at a given time. Unlike on-premises data warehouses, cloud alternatives like Amazon Redshift, Snowflake, and Google BigQuery make it easy to scale as your storage and processing needs change. And in some cases they do it at one-tenth of the cost.
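To make the difference between provisioning for peak load and scaling on demand concrete, here is a minimal sketch in Python. The hourly demand figures are hypothetical, chosen only for illustration, and the model ignores real pricing differences between hardware and cloud services; it simply compares how much capacity each approach keeps running.

```python
# Hypothetical compute units needed in each of six hours (illustrative only).
HOURLY_DEMAND = [2, 2, 3, 10, 4, 3]


def fixed_capacity_hours(demand):
    """On-premises style: provision for the peak and keep it running every hour."""
    return max(demand) * len(demand)


def elastic_capacity_hours(demand):
    """Cloud style: run only the units actually needed in each hour."""
    return sum(demand)


def utilization(demand):
    """Share of the peak-provisioned capacity that is actually used."""
    return elastic_capacity_hours(demand) / fixed_capacity_hours(demand)


if __name__ == "__main__":
    print("Provisioned for peak:", fixed_capacity_hours(HOURLY_DEMAND), "unit-hours")
    print("Scaled on demand:", elastic_capacity_hours(HOURLY_DEMAND), "unit-hours")
    print("Utilization of fixed capacity:", utilization(HOURLY_DEMAND))
```

In this toy scenario a single busy hour dictates the size of the fixed installation, so most of that capacity sits idle the rest of the time.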

Most importantly, cloud data services free up time for analytics. Just ask MANA Partners. The New York-based trading, technology, and asset management firm increased its output of quantitative research by 4X after adopting Google Cloud Platform, an infrastructure as a service (IaaS) solution.

Storage and processing limitations needn’t throttle your analytics anymore.

2. No self-service

Another chronic issue with on-premises database management systems is that they require far too much arduous coding. Only those with high technical acumen – typically a select few within IT – can navigate such systems. And even they struggle to use them.

As you might expect, the path to analytics in a legacy environment is long and winding. An expert developer must work through several complicated steps, not the least of which is the extract, transform, and load (ETL) process. Here, the developer must build integrations and move data from production databases into a data lake or data warehouse, largely by writing tedious code. In an on-premises setting, this can take weeks or even months. All the while, insights that could help cut costs and drive revenue go undiscovered. These problems only get worse as you add more data sources.

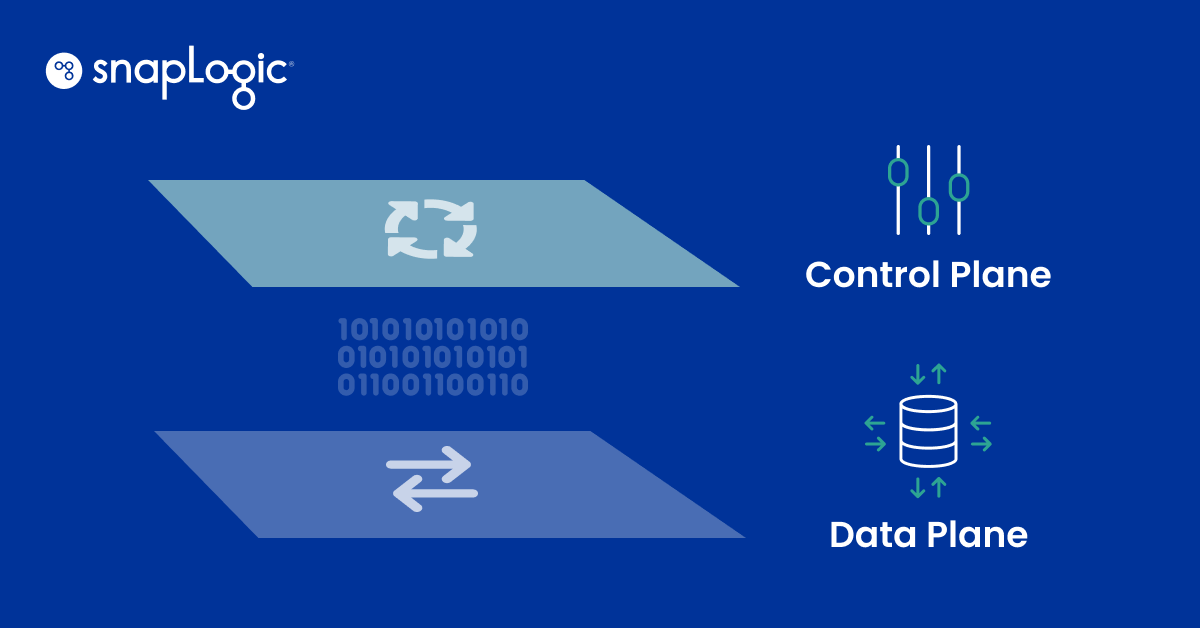
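For readers who have never seen hand-coded ETL, the sketch below shows the three steps in miniature using Python’s built-in `sqlite3` module. The table names (`orders`, `fact_orders`), the columns, and the sample rows are all hypothetical, and two in-memory databases stand in for the production system and the warehouse. A real pipeline would also need scheduling, error handling, and schema management for every source.

```python
import sqlite3


def extract(conn):
    """Pull raw order rows from the (hypothetical) production database."""
    return conn.execute(
        "SELECT id, customer, amount_cents FROM orders"
    ).fetchall()


def transform(rows):
    """Normalize customer names and convert cents to dollars."""
    return [
        (order_id, customer.strip().lower(), amount_cents / 100.0)
        for order_id, customer, amount_cents in rows
    ]


def load(conn, rows):
    """Write the cleaned rows into the (hypothetical) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_orders "
        "(id INTEGER PRIMARY KEY, customer TEXT, amount_usd REAL)"
    )
    conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline():
    """Build a tiny source database, run ETL, and return the warehouse rows."""
    production = sqlite3.connect(":memory:")
    production.execute(
        "CREATE TABLE orders (id INTEGER, customer TEXT, amount_cents INTEGER)"
    )
    production.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "  Acme ", 1250), (2, "Globex", 9900)],
    )
    warehouse = sqlite3.connect(":memory:")
    load(warehouse, transform(extract(production)))
    return warehouse.execute(
        "SELECT id, customer, amount_usd FROM fact_orders ORDER BY id"
    ).fetchall()


if __name__ == "__main__":
    print(run_pipeline())
```

Even this toy version needs separate code for each step, and every new data source means another extract query and another set of cleaning rules.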

Cloud alternatives are much easier to use and all but eliminate the need to code. For example, SnapLogic, a cloud-based integration platform as a service (iPaaS) solution, enables both citizen integrators and experienced data architects to rapidly build data pipelines using a drag-and-drop interface. In one case, a multi-billion-dollar manufacturer of beauty products cut its integration processes from three weeks to three hours with SnapLogic.

Assuming a 40-hour work week, that’s nearly 120 extra hours that can now be devoted to gaining new insights through analytics.

3. Rows are slow

Even if you stripped legacy databases of their complexity and converted them into self-service apps, they still wouldn’t deliver fast analytics. That’s because legacy on-premises systems typically store data in a row orientation. Never mind the fact that your IT team would have spent an inordinate amount of time converting the data into neat rows and columns for storage. When you query a row-based table, the system has to sift through all the data in each row, including irrelevant fields, before extracting the data you need. This makes for slow queries and poor performance, especially when querying a large data set. On-premises data warehouses severely limit your ability to create reports on the fly, retrieve data quickly, and run complex queries.

Column-oriented tables, on the other hand, skip irrelevant fields and read only the columns a query needs. As a result, they’re able to deliver fast analytics. Moreover, column stores are designed to handle the ocean of disparate data permeating our world.
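The difference between the two layouts can be sketched in a few lines of Python. This is a toy model rather than how any particular database engine works: the sample records and field names are hypothetical, and counting "fields read" is a stand-in for disk I/O. Real engines operate on disk pages and add compression and other optimizations.

```python
# Row-oriented layout: all fields of a record are stored together.
ROWS = [
    {"order_id": 1, "customer": "acme", "region": "east", "amount": 120.0},
    {"order_id": 2, "customer": "globex", "region": "west", "amount": 80.0},
    {"order_id": 3, "customer": "initech", "region": "east", "amount": 50.0},
]

# Column-oriented layout: all values of one field are stored together.
COLUMNS = {name: [row[name] for row in ROWS] for name in ROWS[0]}


def total_amount_row_store(rows):
    """Sum the 'amount' field, touching every field of every record."""
    fields_read = 0
    total = 0.0
    for row in rows:
        fields_read += len(row)  # the whole record is read to reach one field
        total += row["amount"]
    return total, fields_read


def total_amount_column_store(columns):
    """Sum the 'amount' field, touching only the 'amount' column."""
    amounts = columns["amount"]
    return sum(amounts), len(amounts)


if __name__ == "__main__":
    print("row store:   ", total_amount_row_store(ROWS))
    print("column store:", total_amount_column_store(COLUMNS))
```

Both functions return the same total, but in this sketch the row layout touches four fields per record while the column layout touches one. The gap widens as tables gain more columns that a given query never references.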

To put this in perspective, an Amazon customer migrated 4 billion records of data from their on-premises data warehouse to Amazon Redshift and reported an 8X improvement in query performance. In one example, a query that took the on-premises system 748 seconds finished in 207 seconds on Amazon Redshift, about 3.6 times faster.

The time you spend waiting for insights is vastly shorter with cloud data warehouses than with legacy incumbents.

The clock is ticking

Investments in cloud data services continue to climb. IDC predicts that global spending on public cloud services and infrastructure will surge to $160 billion by the end of 2018, marking a 23.2% jump from the year before. Those who sit idly by, content to stay on-premises, put themselves at risk.

Legacy shops will increasingly struggle to bear the weight of big data. And while they’re busy provisioning servers, hand-coding integrations, and buckling under technology debt, their cloud-driven competitors will be using analytics to expand their industry dominance.

How long can organizations survive if they only use five percent of the data they collect? We can’t know for sure. But what is becoming clearer is that market share belongs to those who move their data architecture to the cloud. The sooner, the better.