With LLMs, you can generate software with a new programming language: English (or whatever your native tongue is). Will prompt engineering replace the coder’s art, or will software engineers who understand code still have a place in future software lifecycles? We talked to Greg Benson, Professor of Computer Science at the University of San Francisco and Chief Scientist at SnapLogic, about prompt engineering, its strengths and limitations, and whether the future of software will require an understanding of code.

Understanding prompt engineering

Many programming languages have sought to abstract away the nitty-gritty of machine code through syntax that resembles natural language. With LLMs, prompt engineering seems like the culmination of that trend. What do developers need to consider if they want to treat prompts like a programming language?

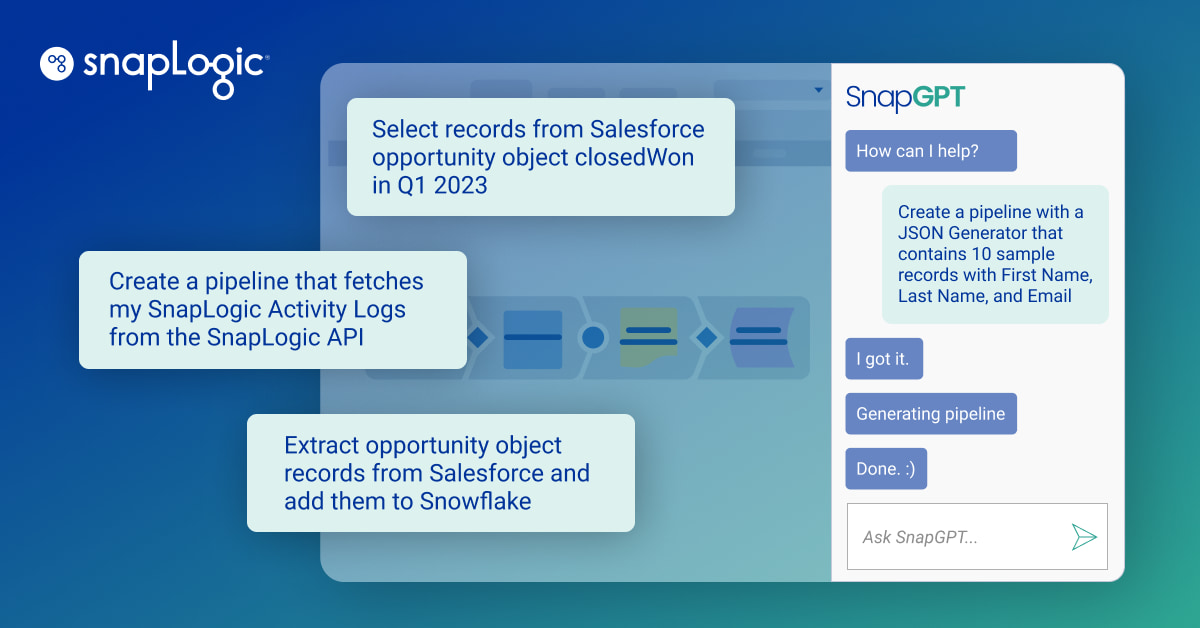

Early declarative languages, like SQL, have attempted to find a balance between structured code and a vocabulary that is closer to natural language. However, SQL is fundamentally a structured language with a formal syntax. While natural language processing (NLP) has been around for quite some time, it wasn’t until the advent of LLMs that the holy grail of going from human intent in natural language to code became a practical reality. LLMs have opened up the possibility of using natural language to describe a desired program, expression, or result. LLMs are particularly good at extracting data from unstructured and multimodal sources, summarizing text, and synthesizing new data and code.

In many cases, prompts and LLMs can either replace complex code or perform functions that would be nearly impossible to achieve in conventional code, or too time-consuming to formulate as a conventional machine learning problem that needs lots of labeled data to train specialized models. In this way, you can think of the prompt as a program. Or rather, English and other natural languages have become new programming languages.

However, treating prompts as “code” comes with caveats. A prompt that works well with one LLM may not work well with another. Furthermore, frontier models are updated frequently, so a prompt that worked well with GPT-4 may work better, just as well, or worse with GPT-4o. And even with the randomness parameter (called temperature) set to 0.0, LLMs running on large GPU clusters can still produce different outputs for the same input from one inference to the next. This makes incorporating LLMs into an application challenging. You need a good way to evaluate the task you want the LLM to perform, so you can track its performance from LLM to LLM and from version to version. Managing this unpredictability is the current price of taking advantage of the power of LLMs.
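
One way to set up that kind of evaluation is a small regression harness: a fixed set of inputs with expected outputs, each run several times against a given model or model version, recording both accuracy and how often repeated runs agree. The sketch below assumes the model is wrapped as a plain Python callable that takes a prompt string and returns text; `evaluate_prompt`, `EvalReport`, the exact-match scoring, and the offline `fake_model` are hypothetical choices for illustration, not any provider’s API.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical model interface: takes the full prompt text, returns the model's reply.
Model = Callable[[str], str]


@dataclass
class EvalReport:
    accuracy: float     # fraction of all runs whose output exactly matched the expected label
    consistency: float  # average share of runs that agreed with each case's most common output


def evaluate_prompt(
    model: Model,
    template: str,
    cases: Sequence[tuple[str, str]],
    runs: int = 3,
) -> EvalReport:
    """Run each (input, expected) case `runs` times against `model` and score the outputs.

    Exact string matching is an illustrative assumption; many tasks need a softer scorer.
    """
    correct = 0
    agreement = 0.0
    for text, expected in cases:
        outputs = [model(template.format(input=text)).strip() for _ in range(runs)]
        correct += sum(out == expected for out in outputs)
        # Count how many runs produced the single most frequent output for this case.
        top_count = Counter(outputs).most_common(1)[0][1]
        agreement += top_count / runs
    return EvalReport(
        accuracy=correct / (len(cases) * runs),
        consistency=agreement / len(cases),
    )


# Offline stand-in for a real LLM call: labels any review containing "great" as positive.
def fake_model(prompt: str) -> str:
    return "positive" if "great" in prompt else "negative"


template = "Classify the sentiment of this review as positive or negative.\nReview: {input}"
cases = [("A great phone.", "positive"), ("It broke in a day.", "negative")]
report = evaluate_prompt(fake_model, template, cases)
print(report)
```

Running the same harness against each candidate model, and again whenever a provider updates one, gives you a number to compare rather than an impression. A consistency score below 1.0 on fixed inputs is a direct signal of run-to-run variation.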

Behind the scenes, of course, prompts will be converted into code in a programming language, which is then compiled or interpreted into machine code. Is some amount of control lost by writing at the higher level of abstraction a prompt offers (as is already the case, to a degree, with programming languages)?

To clarify, prompts are not converted to code; rather, in many cases you can use prompts and LLMs as a replacement for code. So, instead of having the LLM generate code, you can design a prompt that has the LLM perform a task directly on given input data. In this case, there is no conversion of the prompt to code. Instead, the input data is combined with the prompt and sent directly to the LLM for inference. You can think of the result of the inference as the output of a function you have asked the LLM to perform.
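
To make the “prompt as a function” idea concrete, here is a minimal sketch that wraps an instruction and a model call into an ordinary Python function. The model is passed in as a callable so the example runs without network access; `make_llm_function`, the prompt layout, and `fake_model` are hypothetical names for illustration, and in practice the callable would wrap whichever hosted or local model you use.

```python
from typing import Callable

# Hypothetical model interface: takes the full prompt text, returns the model's completion.
Model = Callable[[str], str]


def make_llm_function(instructions: str, model: Model) -> Callable[[str], str]:
    """Wrap a natural-language instruction as a reusable text-to-text function.

    Nothing is compiled or translated into code: each call just combines the
    instructions with the input data and sends the combined text to the model.
    """

    def run(input_data: str) -> str:
        prompt = f"{instructions}\n\nInput:\n{input_data}\n\nOutput:"
        return model(prompt).strip()

    return run


# Offline stand-in for an LLM: pulls the input back out of the prompt and upper-cases it.
def fake_model(prompt: str) -> str:
    data = prompt.split("Input:\n", 1)[1].split("\n\nOutput:", 1)[0]
    return data.upper()


shout = make_llm_function("Rewrite the input text in all capital letters.", fake_model)
print(shout("hello, world"))  # HELLO, WORLD
```

Replacing `fake_model` with a wrapper around a real model leaves callers of `shout` unchanged; what changes is how reliably the model carries out the instruction, which is why the function still needs evaluating.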

In this case, the prompt and input data are converted into a numeric representation (tokens) that is given as input to the LLM. The LLM then generates output tokens one at a time, each conditioned on the tokens that came before, and those tokens are converted back into text and returned as the result. Using an LLM this way, you are relying on it to interpret your prompt correctly for the expected input data. How the LLM arrives at its result is abstracted away: the inference depends on the original training data and the structure of the neural network. So on this path the LLM is something of a black box, and you do give up some control. This is why you need rigorous evaluation techniques to ensure that your prompts, input data, and LLMs are giving you the results you expect.

Adapting skills to a new environment

Are there any skills that may be lost as we shift from programming to NL prompting?

This is a hotly debated question in computer science education. As LLMs get better and better at generating code, and as we can increasingly use natural language to describe desired behavior, what is the role of conventional computer science and computer programming? There will likely be an entire generation of technical workers who can generate useful computer programs without a formal computer science degree. These workers won’t have the same foundational knowledge and skills that computer scientists have today. I want to believe that there will continue to be a need for computer scientists, just as there will continue to be a need for physicists and biologists. That said, how they learn and do their work may change greatly through the use of LLMs. For me personally, LLMs have greatly accelerated the way I learn new concepts and material.

Do you think developers will start to crave agency and push back? At what point do these tools feel like they add productivity, and at what point might developers feel like they have become copy editors for AI engines?

I think many developers resist using coding assistants today precisely because they don’t want to lose their own agency and control over the art of software development. There is a real concern about over-reliance on LLM-generated code. On the one hand, I can implement prototype systems much faster with the help of LLMs, for example by generating a complete JavaScript frontend application, which lets me explore ideas rapidly through working code. On the other hand, what human oversight will be needed before we can trust generated code that goes into production, especially in life-critical systems like surgical robots? The relationship between human developers and LLM-generated code is still evolving. I think there is a path forward in which humans build systems and strategies that use LLMs in ways that ensure trust in the process and keep humans in the loop, without relegating them to mere copy editors.

Are there things that a prompt can do that code cannot?

Yes, prompts and LLMs can describe functions that would be nearly impossible to write as code. There are obvious ones like sentiment analysis or summarization, but the real power lies in generalizing from just a few examples. Accurate information extraction from documents such as PDFs is notoriously difficult to write as code. Putting examples of the desired extracted data into a prompt helps the LLM “learn” how to apply the pattern to future input documents. You may be able to do this with traditional machine learning, but at a very high cost in time and in the data science skill needed to train and test such models.
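
As a sketch of putting examples of desired extracted data into a prompt, the functions below build a few-shot prompt from (document text, expected fields) pairs and parse the model’s JSON reply. The invoice fields (`invoice_number`, `total`), the prompt wording, the JSON reply format, and `fake_model` are hypothetical choices for illustration. Real PDF extraction would also need a separate step that converts the PDF to text, which this sketch does not cover.

```python
import json
from typing import Callable

# Hypothetical model interface: takes the full prompt text, returns the model's reply.
Model = Callable[[str], str]

# Hypothetical worked examples: document text paired with the fields we want extracted.
EXAMPLES = [
    ("Invoice #A-1001\nAmount due: $250.00", {"invoice_number": "A-1001", "total": 250.00}),
    ("INVOICE NO. 7734\nTotal: $1,020.50", {"invoice_number": "7734", "total": 1020.50}),
]


def build_few_shot_prompt(document: str, examples=EXAMPLES) -> str:
    """Show the model a few input/output pairs, then the new document to process."""
    parts = ["Extract the invoice number and total from each document. Reply with JSON only."]
    for text, fields in examples:
        parts.append(f"Document:\n{text}\nJSON: {json.dumps(fields)}")
    parts.append(f"Document:\n{document}\nJSON:")
    return "\n\n".join(parts)


def extract(document: str, model: Model) -> dict:
    reply = model(build_few_shot_prompt(document))
    # json.loads raises json.JSONDecodeError if the model strays from pure JSON.
    return json.loads(reply)


# Offline stand-in for an LLM that follows the demonstrated format.
def fake_model(prompt: str) -> str:
    return '{"invoice_number": "B-42", "total": 99.5}'


print(build_few_shot_prompt("Invoice #B-42\nBalance: $99.50"))
print(extract("Invoice #B-42\nBalance: $99.50", fake_model))
```

The examples are the main lever: when the model mishandles a new document layout, adding a representative example of that layout is often the first thing to try.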

Utilizing large language models

How much does the particular LLM matter to a prompt engineer? Can you pick any you like or are there specific features/sizes/training data that should be considered?

While the frontier models appear to be converging in some capabilities, there are still differences that may affect your specific use case. There are also thousands of specialized LLMs, available for download from Hugging Face, that perform well in specific domains, time series analysis to name one. Costs and capabilities are still changing, and a prompt engineer will want to become familiar with the major LLMs. Tracking open-source LLMs will also be important, because you can run them on your own infrastructure, which offers privacy and security that a cloud LLM provider cannot.

Can you improve prompt results with the right data?

Yes, the example data you choose to add to your prompt can influence LLM results. Furthermore, fine-tuning is becoming more commonplace, which means you can provide a model with far more example data than would fit in a prompt. Which types of examples to provide depends heavily on the specific use case. This area feels more like scientific experimentation than software development: you need to come up with a hypothesis about the prompt and example data, and then you need a way to evaluate that hypothesis. This can involve a lot of trial and error.
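
The hypothesis-and-evaluate loop can be sketched as a small experiment: score several prompt variants, for example with and without a worked example, against the same labeled cases and rank them. `accuracy`, `compare_variants`, the exact-match scoring, and `toy_model` are hypothetical and purely illustrative; the toy model is rigged so that the example helps, whereas a real LLM is exactly what the experiment is meant to find out about.

```python
from __future__ import annotations

from typing import Callable, Sequence

# Hypothetical model interface: takes the full prompt text, returns the model's reply.
Model = Callable[[str], str]


def accuracy(model: Model, template: str, cases: Sequence[tuple[str, str]]) -> float:
    """Fraction of cases where the model's stripped output exactly matches the label."""
    hits = sum(model(template.format(input=text)).strip() == label for text, label in cases)
    return hits / len(cases)


def compare_variants(
    model: Model, variants: dict[str, str], cases: Sequence[tuple[str, str]]
) -> list[tuple[str, float]]:
    """Score each named prompt variant on the same cases; return (name, score), best first."""
    scores = [(name, accuracy(model, tpl, cases)) for name, tpl in variants.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothesis under test: adding a worked example makes the model answer with a bare label.
VARIANTS = {
    "zero_shot": "Label the review as positive or negative.\nReview: {input}\nLabel:",
    "few_shot": (
        "Label the review as positive or negative.\n"
        "Example: Review: Terrible. Label: negative\n"
        "Review: {input}\nLabel:"
    ),
}
CASES = [("I love it.", "positive"), ("Awful service.", "negative")]


# Toy stand-in for an LLM: without an example it answers in a full sentence,
# which fails exact matching; with one, it labels the final review by keyword.
def toy_model(prompt: str) -> str:
    if "Example:" not in prompt:
        return "The sentiment is positive."
    target = prompt.split("Review:")[-1]
    return "positive" if "love" in target else "negative"


results = compare_variants(toy_model, VARIANTS, CASES)
print(results)  # [('few_shot', 1.0), ('zero_shot', 0.0)]
```

With a real model, the same comparison should use many more cases, and repeated runs per case, before a difference between variants is worth trusting.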

Advice for future engineers

Will we all just be prompt engineers in the future or will there always be a need for folks who understand programming languages?

My hope is that there will always be a need for human experts in every domain, including computer science and programming languages. To suggest otherwise would mean subordinating the advancement of civilization to AI. I see a future in which we use LLMs to accelerate both software development and human learning of software and languages, so that we benefit from both the power of LLMs and human creativity and knowledge.

What would your advice to graduating CS majors and freshly-minted junior engineers be as they contend with a new landscape in the world of software development?

I tell my computer science students: you are living in an amazing time in computing history. In my lifetime, this is the most significant technological advancement we have seen, and in the next five years, everything changes, from how companies run and operate, to business efficiency, to the creation of new types of jobs. We’re in a transformative time, and in some ways it’s an experiment, as with many technologies we have seen, such as crypto. So we need to embrace the opportunities at hand. There’s a proliferation of coding assistants that is changing both software development and computer science education, and how we engage with them is not something we can ignore.

This is adapted from an article originally published on the Stack Overflow blog.