Dependable system operation is a requirement for any serious integration platform as a service (iPaaS). Reliability or fault tolerance is often listed as a feature, but it is hard to get a sense of what this means in practical terms. For a data integration project, reliability is challenging because the platform must connect disparate external services, each of which can fail independently. In a previous blog post, we discussed how SnapLogic Integration Cloud pipelines can be constructed to manage end point failures with our guaranteed delivery mechanism. In this post, we look at some of the techniques we use to ensure the reliable execution of the services we control.

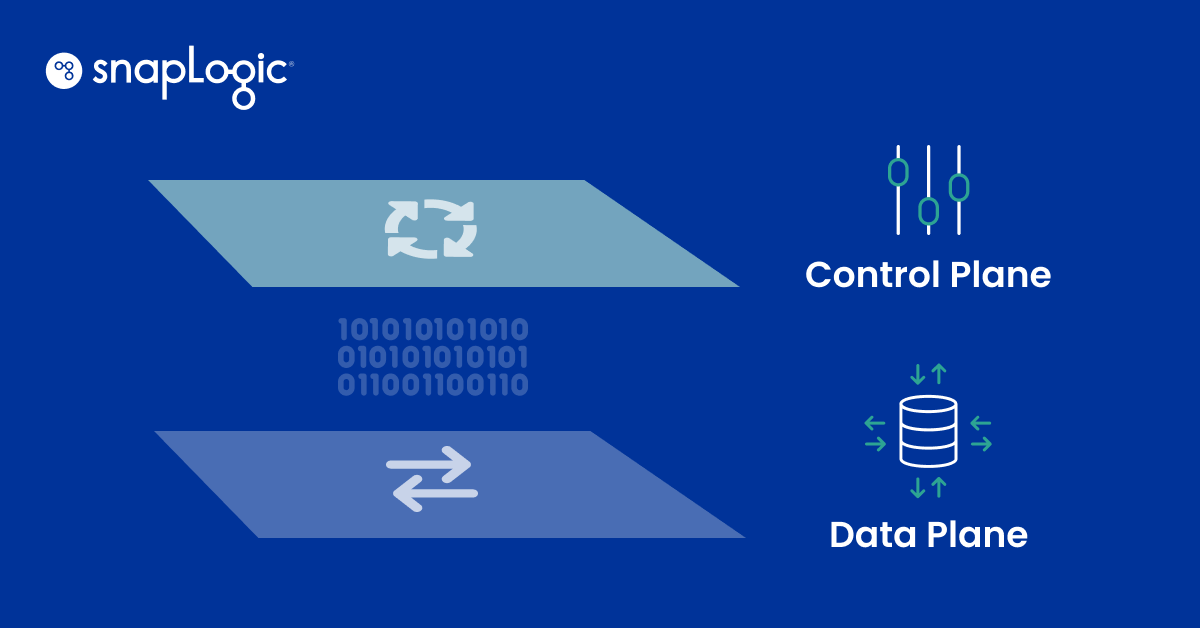

We broadly divide the SnapLogic architecture into two categories: the data plane and the control plane. The data plane is encapsulated within a Snaplex, and the control plane is a set of replicated distributed servers. This separation is useful both for data isolation and for reliability, because we can employ a different approach to fault tolerance in each plane.

Data Plane: Snaplex and Pipeline Redundancy

The Snaplex is a cluster of one or more pipeline execution nodes. A Snaplex can reside either in the SnapLogic Integration Cloud or on-premises. The Snaplex is designed to support autoscaling in the presence of increased pipeline load. In addition, the Monitoring Dashboard monitors the health of all Snaplex nodes. In this way, Snaplex node failure can be detected early so that future pipelines are not scheduled on the faulty node. For cloud-based Snaplexes, also known as Cloudplexes, node failures are detected automatically and replacement nodes are made available seamlessly. For on-premises Snaplexes, also known as Groundplexes, admin users are notified of the faulty node so that a replacement can be made.
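To make the idea of keeping new pipelines off a faulty node concrete, here is a minimal sketch of heartbeat-based health tracking. It is an illustration only, not SnapLogic's implementation: the `NodeHealth` class, the 30-second timeout, the node names, and the round-robin `pick_node` helper are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class NodeHealth:
    """Hypothetical tracker: records the last heartbeat time seen per node."""
    timeout_s: float = 30.0  # assumed threshold, not a SnapLogic setting
    last_seen: dict = field(default_factory=dict)

    def heartbeat(self, node: str, now: float) -> None:
        # Called whenever a node reports in.
        self.last_seen[node] = now

    def healthy_nodes(self, now: float) -> list:
        # A node is healthy if its last heartbeat is recent enough.
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen <= self.timeout_s
        )


def pick_node(health: NodeHealth, now: float, run_id: int) -> str:
    """Choose a node for a new pipeline run, skipping unhealthy nodes."""
    nodes = health.healthy_nodes(now)
    if not nodes:
        raise RuntimeError("no healthy nodes available")
    return nodes[run_id % len(nodes)]  # simple round-robin over healthy nodes


health = NodeHealth()
health.heartbeat("node-a", now=0.0)
health.heartbeat("node-b", now=0.0)
health.heartbeat("node-a", now=40.0)    # node-b has gone silent
print(pick_node(health, now=50.0, run_id=7))
```

In this sketch a node that stops sending heartbeats simply drops out of the candidate list, so later runs land only on nodes that reported recently.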

If a Snaplex node fails during a pipeline execution, the pipeline will be marked as failed. Developers can choose to retry failed pipelines or, in some cases, such as recurring scheduled pipelines, the failed run may be ignored. Dividing long-running pipelines into several shorter pipelines can limit exposure to node failure. For highly critical integrations, it is possible to build and run replicated pipelines concurrently. In this way a single failed replica won’t interrupt the integration. As an alternative, a pipeline can be constructed to stage data in the SnapLogic File System (SLFS) or in an alternate data store such as AWS S3. Staging data reduces the need to re-acquire it from the data source, which helps when the source is slow, as AWS Glacier is. Also, some data sources have higher transfer costs or have transfer limits that would make it prohibitive to request data multiple times in the presence of failures on the upstream end point in a pipeline.
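The staging approach can be sketched as follows. This is a simplified illustration, not a SnapLogic pipeline: `run_with_staging`, the `fetch` and `process` callables, and the use of a local file in place of SLFS or S3 are assumptions made for the example. The point is that the expensive fetch happens at most once, while the processing step can be retried against the staged copy.

```python
import tempfile
from pathlib import Path


def run_with_staging(fetch, process, stage_path: Path, max_attempts: int = 3):
    """Fetch once, stage the data, then retry only the processing step."""
    if not stage_path.exists():
        # Write to a temporary name first so a crash never leaves a
        # half-written staged file behind.
        tmp_path = stage_path.with_suffix(".tmp")
        tmp_path.write_bytes(fetch())
        tmp_path.replace(stage_path)

    last_error = None
    for _ in range(max_attempts):
        try:
            return process(stage_path.read_bytes())
        except RuntimeError as err:  # stand-in for a node failure
            last_error = err
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error


# Usage: a slow source and a processing step that fails twice before succeeding.
attempts = {"fetch": 0, "process": 0}


def slow_fetch() -> bytes:
    attempts["fetch"] += 1
    return b"rows from a slow source"


def flaky_process(data: bytes) -> bytes:
    attempts["process"] += 1
    if attempts["process"] < 3:
        raise RuntimeError("node failed")
    return data.upper()


with tempfile.TemporaryDirectory() as staging_dir:
    result = run_with_staging(slow_fetch, flaky_process, Path(staging_dir) / "staged.bin")
print(result, attempts)
```

A real pipeline would also need a policy for expiring staged data, which this sketch leaves out.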

Control Plane: Service Reliability

SnapLogic’s “control plane” resides in the SnapLogic Integration Cloud, which is hosted in AWS. By decoupling control from data processing, we provide differentiated approaches to reliability. All control plane services are replicated for both scalability and reliability. All REST-based front end servers sit behind the AWS ELB (Elastic Load Balancing) service. If any control plane service fails, a pool of replicated services remains available to handle client and internal requests. This is a case where redundancy helps with both reliability and scalability.

We employ ZooKeeper to implement our reliable scheduling service. An important aspect of the SnapLogic iPaaS is the ability to create scheduled integrations. It is important that these scheduled tasks are initiated at the specified time or at the required intervals. We implement the scheduling service as a collection of servers. All the servers can accept incoming CRUD requests on tasks, but only one server is elected as the leader. We use a ZooKeeper-based leader election algorithm for this purpose. In this way, if the leader fails, a new leader will be elected immediately and resume scheduling tasks on time. We ensure that no scheduled task is missed. In addition to using ZooKeeper for leader election, we also use it to allow the follower schedulers to notify the leader of task updates.
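The post does not say which election algorithm the schedulers use. One common ZooKeeper recipe has each candidate create an ephemeral sequential znode, with the candidate holding the lowest sequence number acting as leader. When the leader's session ends, its znode disappears and the next-lowest candidate takes over. The sketch below simulates that rule in memory. It is not a ZooKeeper client, and the `ElectionSim` class and the scheduler names are hypothetical.

```python
import itertools


class ElectionSim:
    """In-memory stand-in for ephemeral sequential znodes (illustration only)."""

    def __init__(self):
        self._next_seq = itertools.count()
        self._members = {}  # sequence number -> server name

    def join(self, server: str) -> int:
        # Models creating an ephemeral sequential znode for this server.
        seq = next(self._next_seq)
        self._members[seq] = server
        return seq

    def fail(self, server: str) -> None:
        # Models session loss: the server's ephemeral znode is removed.
        self._members = {
            seq: name for seq, name in self._members.items() if name != server
        }

    def leader(self):
        # The member with the lowest sequence number is the leader.
        if not self._members:
            return None
        return self._members[min(self._members)]


election = ElectionSim()
for name in ("scheduler-1", "scheduler-2", "scheduler-3"):
    election.join(name)

print(election.leader())        # the first server to join leads
election.fail("scheduler-1")
print(election.leader())        # leadership moves to the next in line
```

Because a server that rejoins receives a new, higher sequence number, a recovered former leader does not displace the current one in this scheme.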

We also use a suite of replicated data storage technologies to ensure that control data and metadata are stored reliably. We currently use MongoDB clusters for metadata and encrypted AWS S3 buckets for implementing SLFS. We don’t expose S3 directly, but rather provide a virtual hierarchical view of the data. This allows us to track and properly authorize access to the SLFS data.

For MongoDB we have developed a reliable read-modify-write strategy to handle metadata updates in a non-blocking manner using findAndModify. Our approach results in highly efficient updates when there is no conflict, and remains safe in the presence of a write conflict. In a future post we will provide a technical description of how this works.
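Until that description is available, the general shape of a non-blocking read-modify-write can be illustrated with optimistic concurrency: read a document along with a version number, compute the change, and apply it only if the version is unchanged, retrying otherwise. The sketch below is a generic example of that pattern, not SnapLogic's strategy. The `VersionedStore` class is a hypothetical in-memory stand-in for a collection, and its `find_and_modify` method only mimics the atomic conditional update that MongoDB's findAndModify provides when the query filters on a version field.

```python
import copy
import threading


class VersionedStore:
    """Hypothetical in-memory collection with an atomic conditional update."""

    def __init__(self):
        self._docs = {}
        self._lock = threading.Lock()  # models per-document atomicity

    def insert(self, key, doc):
        with self._lock:
            self._docs[key] = {**copy.deepcopy(doc), "version": 0}

    def find(self, key):
        with self._lock:
            return copy.deepcopy(self._docs[key])

    def find_and_modify(self, key, expected_version, new_fields):
        """Apply new_fields only if the stored version still matches."""
        with self._lock:
            doc = self._docs[key]
            if doc["version"] != expected_version:
                return None  # another writer got there first
            doc.update(new_fields)
            doc["version"] += 1
            return copy.deepcopy(doc)


def update_with_retry(store, key, modify, max_attempts=10):
    """Read, compute, and conditionally write; re-read and retry on conflict."""
    for _ in range(max_attempts):
        current = store.find(key)
        version = current.pop("version")
        updated = store.find_and_modify(key, version, modify(current))
        if updated is not None:
            return updated
    raise RuntimeError("too many write conflicts")


store = VersionedStore()
store.insert("task-42", {"runs": 0})
print(update_with_retry(store, "task-42", lambda doc: {"runs": doc["runs"] + 1}))
```

No lock is held between the read and the write, so writers never block each other. A writer that loses the race simply sees `None` and retries with fresh data.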

The Benefits of Software-Defined Integration

By dividing the SnapLogic elastic iPaaS architecture into the data plane and the control plane, we can employ effective, but different, reliability strategies for each. In the data plane, we help identify and correct Snaplex server failures, and we also allow users to implement highly reliable pipelines as needed. In the control plane, we use a combination of server replication, load balancing and ZooKeeper to ensure reliable system execution. Our "one size does not fit all" approach allows us to modularize reliability and employ targeted testing strategies. Reliability is not a product feature, but an intrinsic design feature in every aspect of the SnapLogic Integration Cloud.