This week we announced our Series D financing, led by Ignition Partners. Frank Artale, Managing Partner at Ignition, had this to say:

“The inescapable shift towards big data infrastructure and cloud applications in the enterprise presents a tremendous opportunity for a new approach to data connectivity and self-service. SnapLogic’s modern approach to data access, shaping and streaming positions the company well to capitalize on a tremendous market opportunity.”

While we continue to deliver a powerful elastic integration platform as a service (iPaaS) for connecting and synchronizing cloud and on-premises business applications (Salesforce, ServiceNow, SAP, Workday, Zuora, etc.), over the past few releases we’ve broadened the capabilities of our unified platform to address the growing need for big data integration. Greg Benson, our Chief Scientist, summarized SnapLogic’s big data processing platforms in this post and was recently featured in an Integration Developer News article reviewing our Fall 2014 release. When it comes to Spark, Greg noted in Cloudera’s most recent announcement:

“SnapLogic is adding native support for Apache Spark as part of CDH in upcoming releases of our Elastic Integration Platform. In the first phase, we are adding a Spark Snap that takes advantage of a Spark cluster co-located with our Snaplex processing engine. This allows SnapLogic pipelines to stream data into a Spark resilient distributed dataset (RDD). Our goal is to make it easy to deliver data to Spark from disparate sources such as conventional databases, cloud applications, APIs and any SnapLogic-supported destination. Further applications of Spark include combining our SnapReduce computations and Spark computations into coordinated workflows via SnapLogic pipelines and providing the data wrangling capabilities that will allow organizations to double the productivity of their data scientists.”

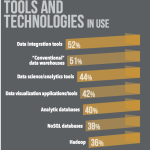

At #Hadoopworld in New York last week, this Wikibon chart caught my attention: 52% of respondents listed data integration tools among the big data tools and technologies they have in use today. But whether it’s the requirements for data parsing or streaming, the need to handle new and different data formats and locations (e.g., the cloud) or the demand for self-service from citizen integrators, traditional extract, transform and load (ETL) tools built for rows and columns will struggle with big data integration use cases.

Check out this detailed demonstration of SnapReduce and the SnapLogic Hadooplex to see the kinds of advantages the SnapLogic Elastic Integration Platform delivers: