In the previous post, we discussed what SnapLogic’s Hadooplex can offer with Spark. Now let’s continue the conversation by looking at which Snaps are available for building Spark Pipelines.

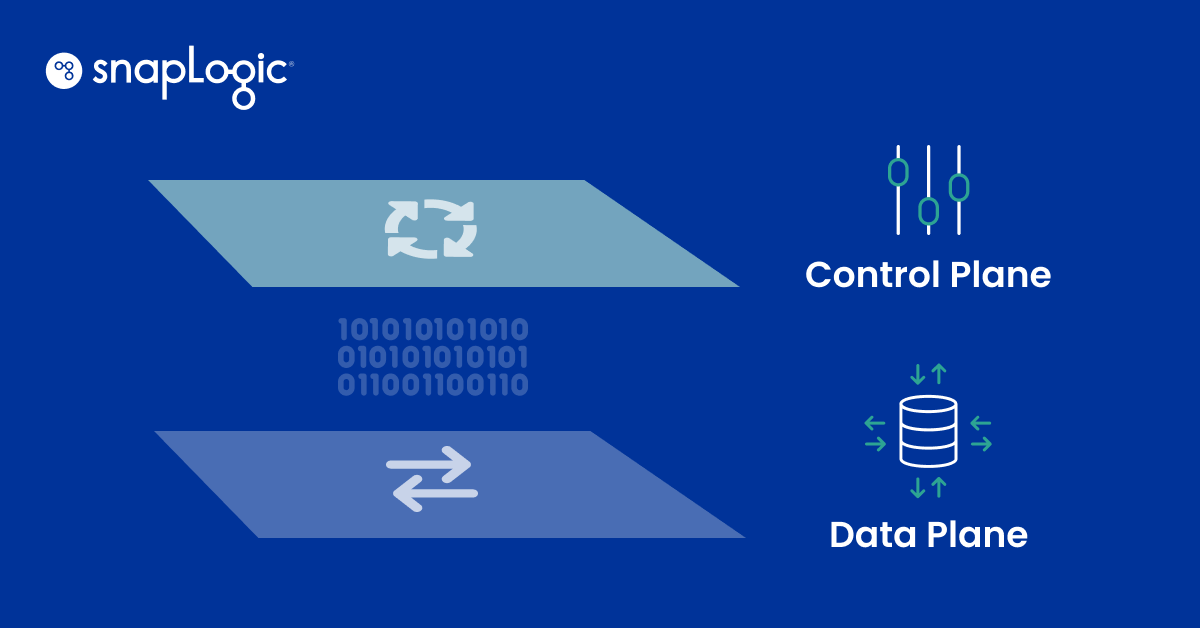

The suite of Snaps available in Spark mode enables us to ingest data from, and land data into, a Hadoop ecosystem. It also lets us transform that data using parallel operations such as map, filter, reduce, or join on a Resilient Distributed Dataset (RDD), which is a fault-tolerant collection of elements that can be operated on in parallel.
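To make the RDD operations above a little more concrete, here is a minimal, single-machine sketch in plain Python. It is a toy model, not Spark or SnapLogic code: the function names (`partition`, `map_filter_reduce`, `join`) are hypothetical, the data is split into partitions round-robin, each partition is mapped, filtered, and reduced in a worker thread, and the per-partition results are then combined. Python threads only illustrate the structure here; they do not give the real parallel speedup a Spark cluster would.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(data, num_partitions):
    """Split a list round-robin into num_partitions slices (a toy stand-in for RDD partitions)."""
    return [data[i::num_partitions] for i in range(num_partitions)]


def map_filter_reduce(data, map_fn, filter_fn, reduce_fn, num_partitions=4):
    """Apply map, then filter, then reduce, processing each partition in a worker thread.

    reduce_fn must be associative and commutative, because elements are regrouped
    into partitions and partial results are combined afterwards (Spark's
    RDD.reduce carries the same requirement).
    """
    parts = partition(data, num_partitions)

    def process(part):
        # Element-wise steps: each element is mapped and filtered independently.
        kept = [y for y in (map_fn(x) for x in part) if filter_fn(y)]
        # Local reduce within this partition; None marks a partition with nothing left.
        return reduce(reduce_fn, kept) if kept else None

    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = [p for p in pool.map(process, parts) if p is not None]
    if not partials:
        raise ValueError("cannot reduce an empty collection")
    # Combine the per-partition results into a single value.
    return reduce(reduce_fn, partials)


def join(left, right):
    """Inner join of two lists of (key, value) pairs, mirroring a pair-RDD join."""
    index = defaultdict(list)
    for key, value in right:
        index[key].append(value)
    # Emit one (key, (left_value, right_value)) pair per matching combination.
    return [(key, (lv, rv)) for key, lv in left for rv in index.get(key, [])]


if __name__ == "__main__":
    # Sum of the even squares of 1..10: 4 + 16 + 36 + 64 + 100.
    total = map_filter_reduce(
        list(range(1, 11)), lambda x: x * x, lambda x: x % 2 == 0, lambda a, b: a + b
    )
    print(total)  # 220
    print(join([("a", 1), ("b", 2)], [("a", "x"), ("a", "y"), ("c", "z")]))
    # [('a', (1, 'x')), ('a', (1, 'y'))]
```

The split into a per-partition step followed by a combine step is what allows this kind of work to be spread across a cluster: each partition can be processed where its data lives, and only the small partial results need to move.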

HDFS can store data in a variety of file formats. Each format supports one or more compression codecs, which affect how much space the data occupies in HDFS. The choice of file format and compression depends on factors such as the read or write performance a specific use case requires and the compression level desired for storing the data.
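The trade-off between compression level, stored size, and compression time can be seen even on a single machine. The sketch below is only an illustration under stated assumptions: it uses Python's standard `gzip` module as a stand-in for whichever codec you would configure in Hadoop (codecs such as Snappy are not in the Python standard library), and the CSV-style sample data and the `compare_levels` helper are hypothetical.

```python
import gzip
import time


def compare_levels(data, levels=(1, 6, 9)):
    """Return (level, compressed_size_bytes, seconds) for each gzip compression level."""
    results = []
    for level in levels:
        start = time.perf_counter()
        compressed = gzip.compress(data, compresslevel=level)
        elapsed = time.perf_counter() - start
        # gzip is lossless, so decompressing must give back the original bytes.
        if gzip.decompress(compressed) != data:
            raise RuntimeError(f"round trip failed at level {level}")
        results.append((level, len(compressed), elapsed))
    return results


if __name__ == "__main__":
    # Hypothetical sample: repetitive CSV-style records, similar to log or event data.
    sample = "".join(
        f"{i % 100},user_{i % 50},2016-01-01\n" for i in range(10_000)
    ).encode()
    print(f"raw: {len(sample)} bytes")
    for level, size, secs in compare_levels(sample):
        print(f"level {level}: {size} bytes in {secs * 1000:.2f} ms")
```

Higher levels generally trade extra CPU time for smaller output; the exact numbers depend on the data and the machine, so it is worth measuring on a representative sample before choosing a format and codec.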

The Snaps available for ingesting and landing data in a Spark pipeline are HDFS Writer, Parquet Writer, HDFS Reader, Sequence Parser, Parquet Reader, and Sequence Formatter.

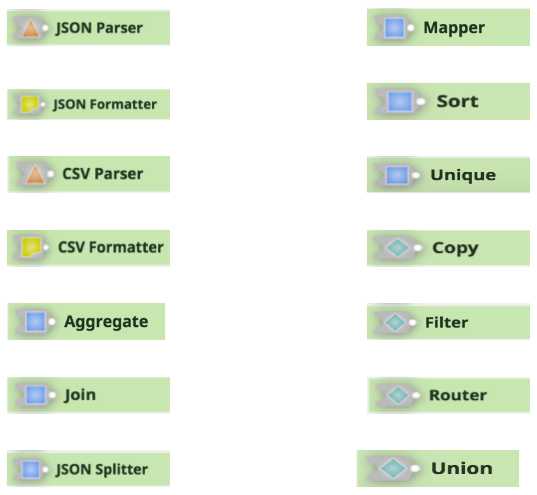

The other Snaps available in a Spark pipeline, which transform data and control how it flows, are JSON Parser, Mapper, JSON Formatter, Sort, CSV Parser, Unique, CSV Formatter, Copy, Aggregate, Filter, Join, Router, JSON Splitter, and Union.

You can learn how to build and execute Spark Pipelines for HDInsight, watch a SnapLogic Spark demo, or contact us for more information about SnapLogic’s Spark big data integration solutions.