This post originally appeared on Glenn Donovon’s blog.

I’ve recently decided to take a hard look at cloud iPaaS (integration platform as a service) and in particular, SnapLogic due to a good friend of mine joining them to help build their named account program. It’s an interesting platform which I think has the potential to help IT with the “last mile” of cloud build-out in the enterprise, not just due its features, but rather because of the shift in software engineering and design occurring that started in places like Google, Amazon and Netflix – and startups that couldn’t afford and “enterprise technology stack” – and is now making its way into the enterprise.

However, while discussing this with my friend, it became clear that one has to understand the history of integration servers, EAI middleware, SOA, ESB, and WS standards to put into context the lessons that have been learned along the way regarding actual IT agility. But let me qualify myself before we jump in. My POV is based on being an enterprise tech sales rep who sold low latency middleware, and standards based middleware, EAI middleware, a SOA grid-messaging bus as well as applications like CRM, BPM and firm-wide market/credit risk management, which have massive system integration requirements. While I did some university and goofing off coding in my life (did some small biz database consulting for a while), I’m not an architect, coder or even a systems analyst. I saw up close and personal why these technologies were purchased, how they evolved over time, what clients got out of them and how all our plans and aspirations played out. So, I’m trying to put together a narrative here that connects the dots for people other than middleware developers and CTO/Architect types. Okay, that said, buckle up.

The Terrain

Let’s define some terms – middleware is a generic a term – I’m using it to refer to message buses/ESBs and integration servers (EAI). The history of those domains led us to our current state and helps make clear where we need to go next, and why the old way of doing systems integration and building SOA is falling away in favor of RESTful web services micro-services based design in general.

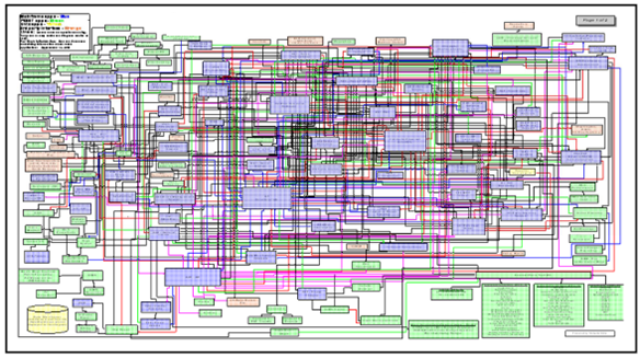

Integration servers – whether from IBM or challengers in those days like SeeBeyond (who I worked for), the point of an integration server was to stop the practice of hand writing code for each system/project to access data/systems. These were often referred to as “point to point” integrations in those days, and when building complex systems in large enterprises before integration servers, the data flows between systems often looked like a plate of spaghetti. One enterprise market risk project I worked on at Bank of New York always comes to mind when I think of this. The data flows of over 100 integration points from which the risk system consumed data had been laid out in one diagram and we actually laminated it on 11×17 paper, making a sort of placemat out of it. It became symbolic of this type of problem for me. It looked kind of like this:

(Image attribution to John Schmidt, recognized authority in the integration governance field and author of books on Integration Competency Centre and Lean Integration)

(Image attribution to John Schmidt, recognized authority in the integration governance field and author of books on Integration Competency Centre and Lean Integration)

So, along came the “integration server”. The purpose was to provide a common interface and tools to use to connect systems without writing code from scratch so integrations between systems would be easy to build and maintain, while also being secure and performing well. Another major motivation was to loosely couple target systems and data consuming systems to isolate both from changes in the underlying code in either. The resulting service was available essentially as an API, they were proprietary systems, and in a sense they were ultimately black boxes from which you consumed or contributed data. They did the transforms, managed traffic, loaded the data efficiently etc. Of course, there were also those dedicated to bulk/batch data as well such as Informatica and later on Ab Initio, but it’s funny, these two very related worlds never came together into a unified offering successfully.

This approach didn’t change how software systems were designed, developed or deployed in a radical way though. Rather, the point was to isolate integration requirements and do them better via a dedicated server and toolkit. This approach delivered a lot of value to enterprises in terms of building robust systems integrations, and also helped large firms adopt packaged software with a lot less code writing, but in the end it offered only marginal improvements in development efficiency, while injecting yet another system dependency (new tool, specialized staff, ongoing operations costs) into the “stack”. Sure you could build competency centers and “factories” but to this day, such approaches end up creating more bureaucracy, more dependencies and complexity while adding less and less value compared to what a developer can build him/herself with RESTful services/micro-services, and most things one wants to integrate with today already have well defined APIs so it’s often much easier to connect and share data anyway. Innovative ideas like “eventually consistent data” and the incredible cost advantages and computing advantages of open source versus proprietary technologies, in addition to public IaaS welcoming such computing workloads at the container level, well let’s just say there is much more changing than just integration. Smart people I talk to tell me that using an ESB as the backbone of a micro-services based system is contrary to the architecture.

Messaging bus/ESB – This is a system for managing messaging traffic on a general purpose bus from which a developer can publish, subscribe, broadcast and multi-cast messages from target and source services/systems. This platform predates the web, fyi, and was present in the late ’80s and ’90s in specialized high speed messaging systems for trading floors, as well as in manufacturing and other industrial settings where low latency was crucial and huge traffic spikes were the norm. Tibco’s early success in trading rooms was due to the fact they provided a services based architecture for consuming market data which scaled and also allowed them to build systems using services/message bus design. These allowed such systems to approach the near-real time requirements of such environments, but they were proprietary, hugely expensive and not at all applicable for general purpose computing. However, using a service and bus architecture with pub/sub, broadcast, multi-point and point to point communications available as services for developers was terrific for isolating apps from data dependencies and dealing with the traffic spikes of such data.

Over time, it became clear that building systems out of services had much general promise (inheriting basic ideas from object oriented design actually), and that’s when the idea of Web Services began to emerge. Entire standards bodies were formed and many open source and other projects spun off to support the standards based approach to web services system design and deployment. Service Oriented Architectures became all the rage – I worked on one project for Travelers Insurance while at SeeBeyond where we exposed many of their mainframe applications as web services. This approach was supposed to unleash agility in IT development.

But along the way, a problem became obvious. The cost, expertise, complexity and time involved in building such elegantly designed and governed systems frameworks ran counter to building systems fast. A good developer could get something done that worked and was high quality without resorting to using all those WS standardized services and conforming to its structure. Central problems included using XML to represent data because the processing power necessary for transforming these structures was always way too expensive for the payoff. SOAP was also a highly specialized protocol, injecting yet another layer of systems/knowledge/dependency/complexity to building systems with a high degree of integration.

The entire WS framework was too formalized, so enterprising developers said, ‘how could I use a service based architecture to my advantage when building a system without relying on all of that nonsense? This is where RESTful web services come in, and then JSON, which changes everything. Suddenly, new, complex and sophisticated web services began to be callable just by HTTP. Creating and using such services became trivially easy in comparison to the old way. Performance and security could be managed in other ways. What was lost was the idea of operating with “open systems” standards from a systems design standpoint, but it turns out that it was less valuable in practice given all the overhead.

The IT View

IT leadership already suspects that the messaging bus frameworks did not give them the most important benefit they were seeking in the first place – agility – yet they have all this messaging infrastructure and all these incredibly well behaved web services running. In a way, you can see this all as part of how IT organizations are learning what real agility looks like when using services based architectures. I think many are ready to dump all that highly complex and expensive overhead which came along with messaging buses when an enterprise class platform comes along that enables them to do so.

But IT still loves integration servers. I think they are eager for a legit, hybrid-cloud based integration server (iPaaS) that gives them an easy way to build and maintain interfaces for various projects at lower cost than on ESB-based solutions while running in the cloud and on-prem. It will need to provide the benefits of a service based architecture – contractual level relationships – without the complexity/overhead of messaging buses. It needs to leverage the flexibility of JSON while providing a meta-data level control over the services, along with a comprehensive operational governance and management framework. It also needs to scale massively, and be distributed in design in order to even be considered for this central role in enterprise systems.

The Real Driver of the Cloud

What gets lost sometimes with all of our technical focus is that economics are what is mostly driving cloud adoption. With the massive cost differentials opening up in public computing versus on-prem or even outsourced data centers due to the tens of billions IBM, Google, MSFT, AWS and others are pouring into their services, the public cloud will become the platform of choice for all computing going forward. All that’s up for debate inside any good IT organization is how long this transition is going to take.

Many large IT organizations clearly see the tipping point cost-wise is at hand already with regard to IaaS and PaaS. This has happened in lots of cloud markets already – Salesforce didn’t really crush Siebel until its annual subscription fees were less than Siebel’s on prem maintenance fees. Remember, there was no functional advantage in Salesforce over Siebel. Hint: Since the economics are the driver, it’s not about having the most elegant solution rather than being able to actually do the job somehow, even if not pretty or involving tradeoffs.

Deeper hint: Give a good systems engineer a choice between navigating a nest of tools and standards and services, along with all the performance penalties, overhead, functional compromises and dependencies they bring along, versus having to write a bit more code and being mindful of systems management, and she/he will choose the latter every time. Another way of saying this is that complexity and abstraction are very expensive when building systems and the benefits have to be really large for the tradeoff to make sense, and I don’t believe that in the end, ESBs and WS standards for the backbone of SOA ever paid off in the way it needed to. And never will. The industry paid a lot to learn that lesson, yet it seems that there is a big reluctance to discuss this openly. The lesson? Simplicity = IT Agility.

The most important question is what core enterprise workloads can move to the public cloud feasibly, and that is still a work in progress. Platforms like Apprenda and other hybrid cloud solutions are crucial part of that mix as one has to be able to assign data and process to appropriate resources from a security/privacy and performance/cost POV. A future single “cloud” app built by an enterprise may have some of its data in servers on Azure in three different data centers to manage data residency, other data in on prem data stores, be accessing data from SaaS apps, and accessing still other data in super cheap simple data stores on AWS. Essentially, this is a “policy” issue and I’d say that if a hybrid iPaaS like Snaplogic can fit into those policies, and behave well with them and facilitate connecting these systems, it has a great shot at being a central part of the great rebuild of core IT systems we are going to watch happen over the next 10 years. This is because it provides the enterprise with what it needs, an integration server while leveraging RESTful services, micro-services based architectures and JSON without an ESB.

This is all coming together now, so you will see growing interest in throwing out the old integration server/message bus architectures in organizations focused on transformation and agility as core values. I think the leadership of most IT organizations are ready to leave the old WS standards stuff behind, along with bus/message/service architectures, but their own technical organizations are still built around them so this will take some time. It’s also true that message buses will not be eliminated entirely, as there is a place for messaging services in systems development – just not as the glue for SOA. And of course, low latency buses will still apply to building near-real time apps in say engineering settings or on trading floors, but using message buses as a general purpose design pattern will just fade from view.

The bottom line is that IT leaders are just as frustrated they didn’t get the agility out of building systems using messaging bus/SOA patterns as business sponsors are about the costs and latency of these systems. All the constituencies are eager for the next paradigm and now is the time to engage in dialog about this new vision. To my thinking, this is one of the last crucial parts of IT infrastructure which needs to be modernized and cloud enabled to move the enterprise computing workloads to the cloud, and I think SnapLogic has a great shot at helping enterprises realize this vision.

______

About the Author

Glenn Donovon has advised, consulted to or been an employee of 23 startups, and he has extensive experience working with enterprise B2B IT organizations. To learn more about Glenn, please visit his website.